The sheer volume of data flowing through logs, data lakes, and real-time streams presents a major challenge, especially when that data contains Personally Identifiable Information (PII).

In this blog post, we explore how integrating Streamdal with Logstash can level up your log processing, with a focus on PII redaction.

Streamdal: Centralizing PII Redaction Rules

Streamdal, an open-source platform, lets us centralize the management of PII redaction rules and transformations. Because the rules are defined once in the Streamdal console UI, we can apply them uniformly across the entire log processing pipeline, ensuring consistency and compliance with privacy standards.

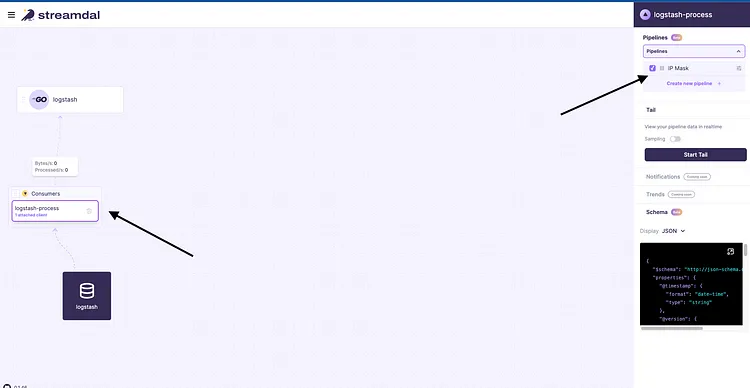

Streamdal dashboard

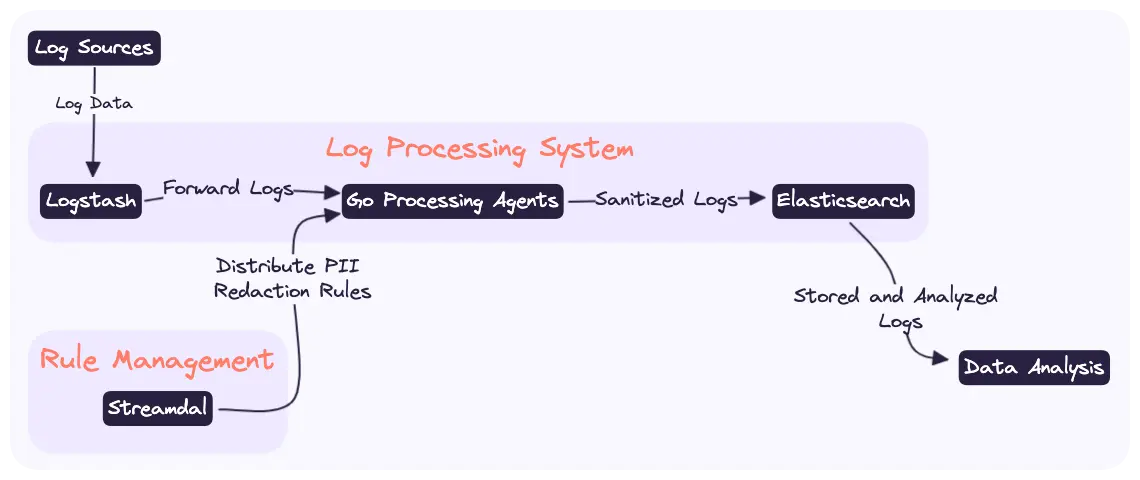

Log Processing Agent

We built our own log processing agent in Go (Golang), which is well suited to complex, real-time data processing. The agents identify and redact PII in logs, so sensitive information is handled and stored securely.

The Log Processing Agent is an additional service you deploy alongside your Logstash agents. It receives the processing rules defined in the Streamdal console UI, processes events sent from Logstash, and forwards the processed events back to Logstash, which then ships them to Elasticsearch.

Architecture

Logstash and Elasticsearch

Once processed by our log-processor, these PII-sanitized logs are forwarded back to Logstash. From there, they enter Elasticsearch, our chosen engine for log storage and analysis. Elasticsearch provides the capability to store, search, and analyze large volumes of data, now with the added assurance that PII elements have been securely redacted.

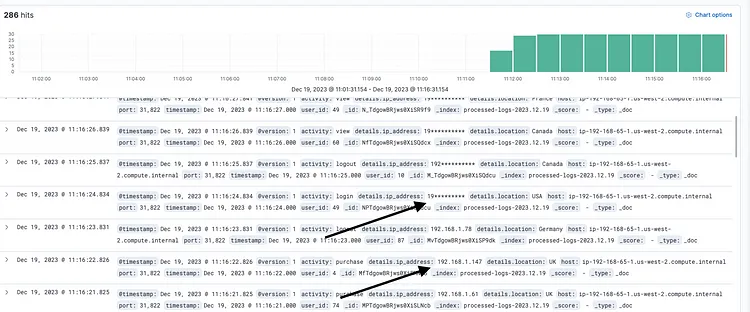

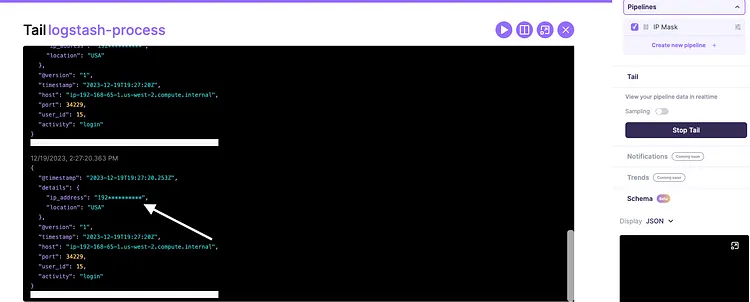

Masked PII

Deployment Overview

We briefly cover the deployment in the steps below. The full demo environment, with Elasticsearch, Logstash, log-processor, and Kibana, is available here.

- Deploy Streamdal in either Docker or Kubernetes.

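- Deploy the Streamdal log processor. The example below shows what your docker-compose file might look like; see the log-processor repo for more details.

```yaml
services:
  # Streamdal server, Logstash, Elasticsearch, and Kibana services omitted
  log-processor:
    container_name: go-app
    image: streamdal/log-processor
    environment:
      - SERVER=streamdal-server:8082 # Streamdal server address
      - LISTEN_PORT=6000 # port Logstash sends raw events to
      - LOGSTASH_OUTPUT_PORT=logstash-server:7002 # Logstash tcp input for processed events
      - STREAMDAL_TOKEN=1234 # auth token for the Streamdal server (demo value)
    ports:
      - "6000:6000"
      - "7002:7002"
```

- Configure Logstash to pass JSON data to the Streamdal log processor.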

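Logstash merges every input and output in a pipeline into a single event flow, so tag events by the input they arrive on and route them with conditionals. Without this, raw events would also be written to Elasticsearch unredacted, and processed events would be sent back to the log processor in a loop.

```conf
input {
  # Ingest raw data
  tcp {
    port => 5044
    codec => json_lines
    tags => ["raw"]
  }

  # Receive redacted events back from the Streamdal log-processor
  tcp {
    port => 7002
    codec => json_lines
    tags => ["processed"]
  }
}

output {
  if "processed" in [tags] {
    # Store redacted events in Elasticsearch
    elasticsearch {
      hosts => ["elasticsearch:9200"] # Assumes Elasticsearch service is named 'elasticsearch' in docker-compose
      index => "processed-logs-%{+YYYY.MM.dd}" # Customize the index name as needed
    }
  } else {
    # Pass raw events to the Streamdal log-processor
    tcp {
      host => "log-processor" # compose service name of the log processor
      port => 6000
      codec => json_lines
    }
  }
}
```

- Confirm the service is up and running in the Streamdal console UI by opening http://127.0.0.1:8080 in your browser.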

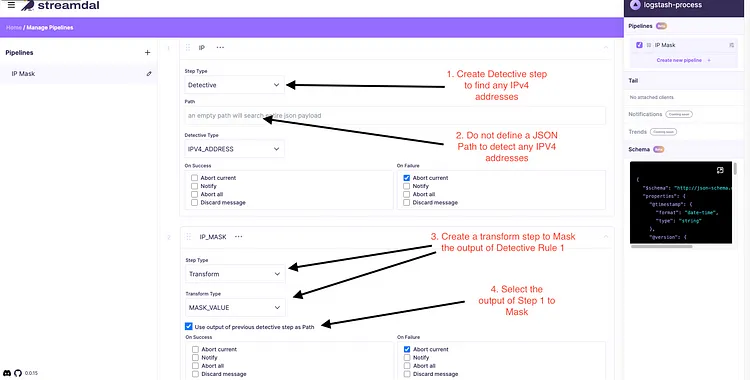

- Create a pipeline in the console UI. In this case, we use the preexisting IPV4_ADDRESS rule to mask IPv4 addresses in the output.

Create a Detective Rule To Mask PII IPV4 addresses

- Attach the Smart PII pipeline to your Logstash workflow.

- Use the tail function to confirm data is being masked.

- Confirm that the masked data is arriving back in Logstash.
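To make the IPV4_ADDRESS masking step concrete, here is a rough Go approximation of what masking IPv4 addresses across an event involves. It is not how Streamdal's Detective rules are implemented: the regex-plus-`net.ParseIP` check, the recursive walk over nested JSON fields, and masking with same-length asterisks are all assumptions made for illustration.

```go
package main

import (
	"fmt"
	"net"
	"regexp"
	"strings"
)

// ipv4Candidate matches dotted-quad tokens such as 10.0.0.7. It also matches
// out-of-range values like 999.1.1.1, which maskIPv4 filters out.
var ipv4Candidate = regexp.MustCompile(`\b(?:\d{1,3}\.){3}\d{1,3}\b`)

// maskIPv4 replaces each valid IPv4 address in s with asterisks of the same length.
func maskIPv4(s string) string {
	return ipv4Candidate.ReplaceAllStringFunc(s, func(m string) string {
		if net.ParseIP(m) == nil {
			return m // matched the pattern but is not a valid address
		}
		return strings.Repeat("*", len(m))
	})
}

// maskEvent walks a decoded JSON value (as produced by encoding/json) and
// masks IPv4 addresses in every string, including nested objects and arrays.
// Maps and slices are modified in place.
func maskEvent(v any) any {
	switch t := v.(type) {
	case string:
		return maskIPv4(t)
	case map[string]any:
		for k, val := range t {
			t[k] = maskEvent(val) // overwriting existing keys during range is safe
		}
		return t
	case []any:
		for i, val := range t {
			t[i] = maskEvent(val)
		}
		return t
	default:
		return v // numbers, booleans, and nil pass through unchanged
	}
}

func main() {
	event := map[string]any{
		"message": "connection from 192.168.1.20 refused",
		"client":  map[string]any{"ip": "10.0.0.7", "port": 443.0},
	}
	maskEvent(event)
	fmt.Println(event["message"], event["client"])
}
```

The `net.ParseIP` check is what separates real addresses from look-alikes such as `999.1.1.1`, which the pattern alone would happily mask.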

See It In Action

We’ve put together a video tutorial demonstrating automatic detection and masking of PII in action. Check out the video below!

Conclusion

With the log processor we developed, we’ve achieved a level of flexibility and control that was previously unattainable. Here are the key takeaways from our journey:

- Smart PII Rules: Logstash filters are static rules that require hand-crafted regular expressions. We can now attach Detective rules to any workflow; they automatically scan for matching PII and replace it, remove it, or notify on it.

- Dynamic Rule Deployment: Our solution distributes rules to all log-processing agents in real time, so we can adapt quickly to new data patterns or regulatory requirements.

- Real-Time Monitoring and Metrics: We can monitor log metrics continuously, which keeps our finger on the pulse of our data streams and lets us respond proactively to emerging trends or anomalies.

- Creating and Managing Real-Time Pipelines: We can now construct and modify real-time pipelines, so data processing and transformations happen before the data reaches its final destination in Elasticsearch. This is essential for meeting security requirements.

- Flexible Rule Management: The ability to attach or detach rules in real-time, without the need for restarts or manual reconfiguration, is a game-changer. It offers unparalleled adaptability in our log processing, ensuring that our systems remain agile and responsive to change.

- Seamless Integration and Scalability: Integrating Streamdal with our existing Logstash and Elasticsearch infrastructure was seamless. The scalability of this solution means it can grow with our needs, handling increasing volumes of data without sacrificing performance.

Want to nerd out with me and other misfits about your experiences with monorepos, deep-tech, or anything engineering-related?

Join our Discord; we’d love to have you!